Background

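A recent work project required me to understand what interleaved thinking is. Until now I only knew the ordinary thinking mechanism.

In essence, thinking means that before the model gives its formal answer, it first emits its internal reasoning.

With that approach, however, the thinking content is usually stripped out on the next call to the LLM, and only the actual reply content is sent back.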
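A minimal sketch of that stripping step, not any vendor's actual client code. It assumes each message has a `content` list whose parts may carry `reasoningContent` and `reasoningDetails` fields; the function name `strip_reasoning` and the sample history are made up for illustration.

```python
import copy

# Field names assumed for illustration; real APIs may name them differently.
REASONING_KEYS = ("reasoningContent", "reasoningDetails")


def strip_reasoning(messages):
    """Return a copy of the history with reasoning fields removed from every content part."""
    cleaned = copy.deepcopy(messages)
    for message in cleaned:
        for part in message.get("content", []):
            for key in REASONING_KEYS:
                part.pop(key, None)
    return cleaned


# Hypothetical two-message history.
history = [
    {"type": "user", "content": [{"type": "text", "text": "Print 1 to 100"}]},
    {
        "type": "assistant",
        "content": [
            {
                "type": "text",
                "text": "Here is a script.",
                "reasoningContent": "The user wants a simple loop...",
            }
        ],
    },
]

next_request = strip_reasoning(history)
print(next_request[1]["content"][0])
# {'type': 'text', 'text': 'Here is a script.'}
```

The deep copy keeps the stored history untouched, so the reasoning can still be logged or inspected locally even though it is not sent back to the model.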

What is interleaved thinking

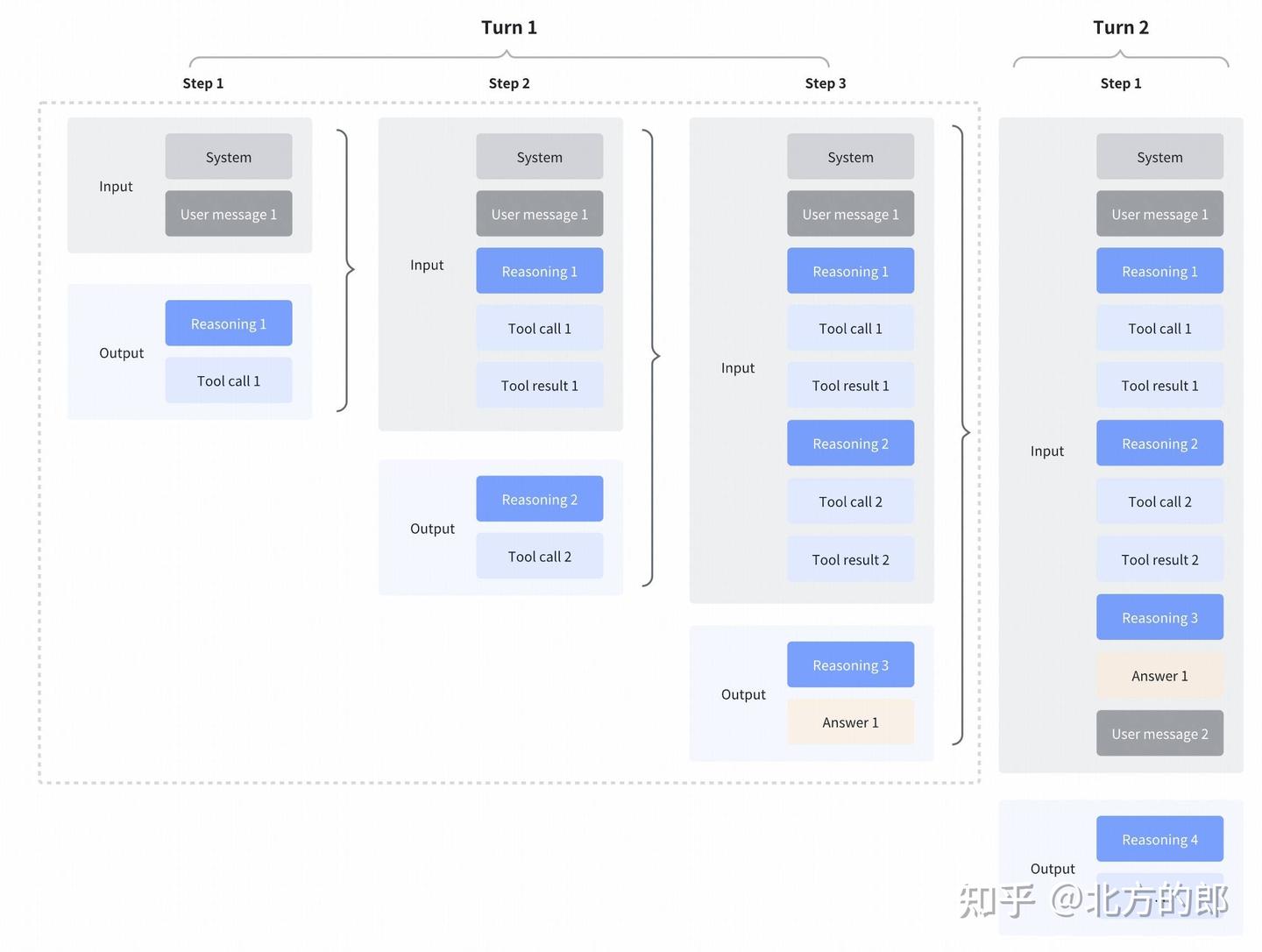

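The picture above makes it fairly clear:

This is one of the most distinctive designs of GLM-4.7.

In multi-turn conversations or long-running tasks, the model can keep its earlier reasoning structure and intermediate conclusions instead of “understanding the context from scratch” on every turn.

At the data level, this means the reasoningDetails of every output are kept, so the intermediate thinking content is preserved, as in the following message excerpt: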

    {
      "content": [
        {
          "text": ",从1输出到100,每输出一个数字换一下行",
          "type": "text"
        }
      ],
      "type": "user",
      "updateTime": "2026-01-12T12:10:18.300+0000"
    },
    {
      "content": [
        {
          "reasoningContent": "The user is asking me to output numbers from 1 to 100, with each number on a new line. This is a simple programming task that I can help with. Let me create a simple script to do this.\n\nThe user wrote \"ultrathink\" which suggests they want me to think carefully about this, but this is a straightforward task.\n\nI should create a simple script that prints numbers 1 to 100, each on a new line. I'll use Python since it's a common language and very straightforward for this task.",
          "reasoningDetails": [
            {
              "format": "MiniMax-response-v1",
              "id": "reasoning-text-1",
              "index": 0,
              "text": " Python since it's a common language and very straightforward for this task.",
              "type": "reasoning.text"
            }
          ],
          "text": "我来为你创建一个输出1到100数字的程序,每个数字占一行:\n",
          "type": "text"
        },
        {
          "toolCallId": "111",
          "toolName": "edit_file",
          "toolParams": "{\"target_file\": \"print_numbers.py\", \"instructions\": \"Create a Python script that prints numbers from 1 to 100, each on a new line\", \"code_edit\": \"# 输出1到100的数字,每个数字占一行\\nfor i in range(1, 101):\\n print(i)\"}",
          "type": "tool_use"
        }
      ],
    },
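To make the contrast with plain thinking concrete, here is a rough sketch of how the next request could be assembled when the reasoning is kept. It reuses the field names from the excerpt above (`reasoningDetails`, `toolCallId`, `tool_use`); the `tool_result` message type, the `"assistant"` type value, and the function `next_request_history` are assumptions for illustration and do not come from any specific API.

```python
def next_request_history(history, assistant_message, tool_outputs):
    """Build the history for the next call, keeping the assistant turn intact.

    tool_outputs maps a toolCallId to the text the tool returned.
    """
    updated = list(history)
    # Interleaved thinking: the assistant message is appended unchanged,
    # so reasoningContent / reasoningDetails travel with it.
    updated.append(assistant_message)
    for call_id, output in tool_outputs.items():
        updated.append(
            {
                "type": "tool_result",  # hypothetical type name
                "toolCallId": call_id,
                "content": [{"type": "text", "text": output}],
            }
        )
    return updated


user_message = {
    "type": "user",
    "content": [{"type": "text", "text": "Print the numbers 1 to 100"}],
}
assistant_message = {
    "type": "assistant",  # assumed; the excerpt does not show this field
    "content": [
        {
            "type": "text",
            "text": "I will create a script.",
            "reasoningDetails": [
                {"type": "reasoning.text", "index": 0, "text": "Use Python."}
            ],
        },
        {"type": "tool_use", "toolCallId": "111", "toolName": "edit_file"},
    ],
}

history = next_request_history(
    [user_message], assistant_message, {"111": "file written"}
)
# Collect every reasoning fragment that will be sent back to the model.
kept = [
    detail["text"]
    for message in history
    for part in message["content"]
    for detail in part.get("reasoningDetails", [])
]
print(len(history), kept)
# 3 ['Use Python.']
```

The only difference from the ordinary thinking flow is that nothing is removed from the assistant turn before it goes back into the history, so the model sees its own earlier reasoning when it reads the tool result.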

References

为什么 2025 的基模公司都押注 Interleaved Thinking? (Why are the foundation-model companies of 2025 all betting on Interleaved Thinking?), Zhihu (知乎)